Crosswords -- Bill Moorier

I've been interested in computer-generated crosswords for a long time, and over the years I've written several programs of my own to generate them. Recently, with the addition of modern AI techniques, I've started to feel like the output is getting quite good -- perhaps even beginning to rival something a human would create. I don't know of any other attempt at fully automated crossword generation whose output is this good (if you know of one, I would love to hear about it!).

You can find my most recent attempts here.

If you simply ask a modern AI (like ChatGPT, for example) to generate a crossword for you, at the time of writing (2024-12-21) the results are not good. I've tried with all the models I can get my hands on, and have never managed to get anything usable out of them.

But a combination of old-school computer science and modern AI can be very successful!

I start with a huge wordlist, which I compiled from a number of sources on the internet. This is just a giant list of English words and phrases. Recently I've been asking AI models to tell me which entries in the list are the most obscure, so that I can consider deleting them (but this is still a work in progress).
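Merging lists from several sources means reconciling different spellings of the same entry. Below is a minimal sketch of that cleanup, under assumptions of my own rather than anything described above: entries are folded to the uppercase letters A-Z (so "ice cream" and "Ice-Cream" both become `ICECREAM`), entries shorter than three letters are dropped, and a hypothetical `blocklist` stands in for the obscure words you decide to delete. The result is bucketed by length, which is how a grid filler will usually want to look words up.

```python
import re
import unicodedata
from collections import defaultdict


def normalize_entry(raw):
    """Fold accents (e.g. "café" -> "cafe"), then keep only the letters A-Z, uppercased."""
    ascii_text = unicodedata.normalize("NFKD", raw).encode("ascii", "ignore").decode("ascii")
    return re.sub(r"[^A-Z]", "", ascii_text.upper())


def build_wordlist(lines, min_len=3, blocklist=frozenset()):
    """Merge raw entries into a deduplicated dict mapping length -> sorted list of entries.

    min_len and blocklist are illustrative parameters, not taken from the original post.
    """
    by_length = defaultdict(set)
    for raw in lines:
        entry = normalize_entry(raw)
        if len(entry) >= min_len and entry not in blocklist:
            by_length[len(entry)].add(entry)
    return {n: sorted(words) for n, words in by_length.items()}
```

Using sets while merging makes duplicates across sources disappear for free; the final sort just makes the output deterministic.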

Next comes my grid generator. If you want to generate a nice crossword you have to start with a good-looking grid. American audiences tend to expect 180-degree rotational symmetry, for example. My grid generator starts with a blank array of white squares: 7×7, 11×11, 13×13, or 15×15. Then it randomly colors squares black, in pairs, to maintain 180-degree symmetry. The process isn't completely random though. There's a small bias toward the horizontal and vertical axes in the middle of the grid, and another small bias toward even-numbered coordinates (if you start numbering at 1). I've found that both biases make the next step more likely to succeed.
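To make the two biases concrete, here is a minimal sketch of a weighted, symmetric pair choice. The weighting formula and the `axis_bias`/`even_bias` parameters are hypothetical, and I've assumed "even-numbered coordinates" means cells whose row and column are both even when counting from 1. Because every grid size listed is odd, each cell and its 180-degree partner get the same weight, so picking one cell is equivalent to picking the pair. Turning either parameter up exaggerates the corresponding bias.

```python
import random


def mirror(r, c, n):
    """Partner of (r, c) under 180-degree rotation in an n x n grid (0-based indices)."""
    return n - 1 - r, n - 1 - c


def cell_weight(r, c, n, axis_bias=0.5, even_bias=0.5):
    """Relative weight for choosing white cell (r, c) to turn black (0-based, odd n)."""
    mid = (n - 1) // 2
    # Cells near the middle row or middle column get a boost that fades with distance.
    d = min(abs(r - mid), abs(c - mid))
    weight = 1.0 + axis_bias / (1 + d)
    # Assumed reading: both coordinates even when counting from 1 (odd 0-based indices).
    if (r + 1) % 2 == 0 and (c + 1) % 2 == 0:
        weight += even_bias
    return weight


def choose_black_pair(white_cells, n, rng):
    """Pick one white cell by weight and return it together with its symmetric partner.

    If the grid is symmetric so far, the partner of a white cell is also white.
    """
    weights = [cell_weight(i, j, n) for i, j in white_cells]
    r, c = rng.choices(white_cells, weights=weights)[0]
    return (r, c), mirror(r, c, n)
```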

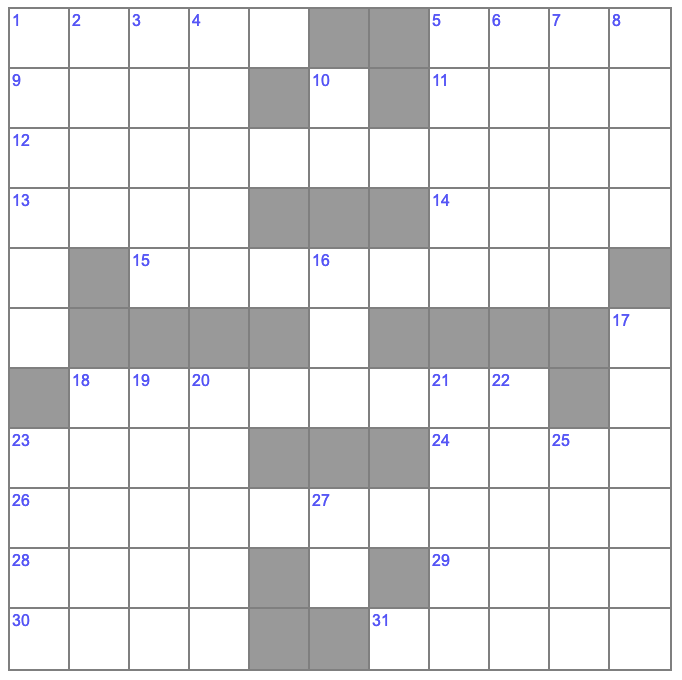

Exaggerating the first bias will get you a grid like this, where most of the black squares are very close to either the horizontal or the vertical axes:

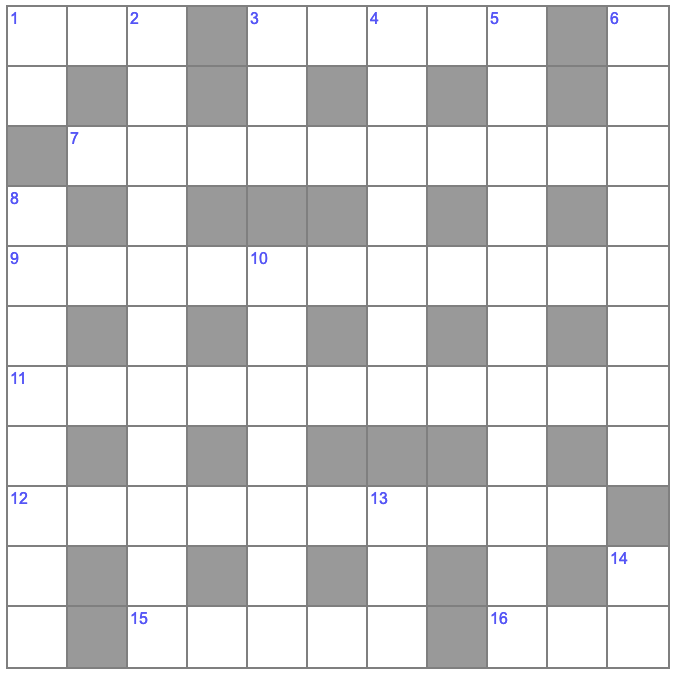

On the other hand, exaggerating the second bias will get you a grid like this, with clues "criss-crossing" each other. I think of this as more of a "British-style" grid:

With both of these biases present (but turned down a bit from the above grids), we keep coloring squares black until some target "density" is reached (again, I've set that number empirically to make the next step more likely to succeed).
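The stopping rule can be sketched as a loop that keeps blackening symmetric pairs until the black fraction reaches a target. This is illustrative only: the default `target_density=0.16` is a placeholder (the real value was tuned empirically and isn't given here), and `weight` is a hypothetical hook where biases like the ones described above could be plugged in; by default every cell is equally likely.

```python
import random


def generate_grid(n, target_density=0.16, weight=lambda r, c, n: 1.0, rng=None):
    """Blacken cells in 180-degree-symmetric pairs until the black fraction reaches target_density.

    Returns an n x n list of lists where True marks a black square.
    """
    rng = rng or random.Random()
    grid = [[False] * n for _ in range(n)]
    black = 0
    while black / (n * n) < target_density:
        # White cells in the "first half" of the grid, so each symmetric pair is listed once
        # (the center cell of an odd grid is its own partner and is included).
        cells = [(r, c) for r in range(n) for c in range(n)
                 if not grid[r][c] and (r, c) <= (n - 1 - r, n - 1 - c)]
        if not cells:
            break
        r, c = rng.choices(cells, weights=[weight(i, j, n) for i, j in cells])[0]
        mr, mc = n - 1 - r, n - 1 - c
        grid[r][c] = grid[mr][mc] = True
        black += 1 if (r, c) == (mr, mc) else 2
    return grid
```

Since squares are added two at a time, the final density can overshoot the target by at most two squares' worth.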

Once we have a grid, we try to fill it with words! I use a simple backtracking search for that, with a timeout to stop the search on grids that are likely impossible to fill. In practice it's easy to generate a new filled grid from scratch about once every two minutes.
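Here is a minimal sketch of a backtracking fill with a deadline. Several details are my own assumptions rather than anything stated above: the grid is a list of strings with `#` for black squares, words are already uppercase, no word may appear twice, and the search always expands the slot with the fewest matching words (a common "most constrained first" heuristic; the post only says "simple backtracking"). `fill` returns `None` both for unfillable grids and when the deadline passes, which matches the idea of giving up on a grid and generating a fresh one.

```python
import time


class FillTimeout(Exception):
    """Raised internally when the search runs past its deadline."""


def find_slots(grid):
    """Return every across and down run of 2+ white cells as a list of (row, col) coordinates."""
    n_rows, n_cols = len(grid), len(grid[0])
    slots = []
    for horizontal in (True, False):
        outer, inner = (n_rows, n_cols) if horizontal else (n_cols, n_rows)
        for i in range(outer):
            run = []
            for j in range(inner + 1):  # the extra step flushes a run ending at the edge
                cell = (i, j) if horizontal else (j, i)
                if j < inner and grid[cell[0]][cell[1]] != "#":
                    run.append(cell)
                else:
                    if len(run) >= 2:
                        slots.append(run)
                    run = []
    return slots


def fill(grid, words, timeout_s=10.0):
    """Backtracking fill. Returns {(row, col): letter}, or None if unfillable or out of time."""
    deadline = time.monotonic() + timeout_s
    by_len = {}
    for w in words:
        by_len.setdefault(len(w), []).append(w)
    letters = {}  # (row, col) -> letter currently placed
    used = set()  # words already in the grid

    def candidates(slot):
        # Unused words of the right length that agree with every letter already placed.
        return [w for w in by_len.get(len(slot), [])
                if w not in used and all(letters.get(cell, ch) == ch for cell, ch in zip(slot, w))]

    def solve(remaining):
        if time.monotonic() > deadline:
            raise FillTimeout
        if not remaining:
            return True
        # Expand the most constrained slot; an empty candidate list forces a backtrack.
        cands, slot = min(((candidates(s), s) for s in remaining), key=lambda x: len(x[0]))
        rest = [s for s in remaining if s is not slot]
        for w in cands:
            newly_placed = [cell for cell in slot if cell not in letters]
            for cell, ch in zip(slot, w):
                letters[cell] = ch
            used.add(w)
            if solve(rest):
                return True
            # Undo this choice before trying the next word.
            used.discard(w)
            for cell in newly_placed:
                del letters[cell]
        return False

    try:
        return dict(letters) if solve(find_slots(grid)) else None
    except FillTimeout:
        return None
```

For example, `fill(["...", "...", "..."], ["CAB", "ODE", "WET", "COW", "ADE", "BET"])` fills the 3×3 grid so that every row and column is one of the six words.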

After the grid is full of words, we use an LLM to generate some clues. I've iterated over many models and prompts for this. I think the current performance is quite good, but far from perfect. One noticeable defect is that the models I've tried tend not to pay close enough attention to the instruction never to use the solution word anywhere in the clue. In particular, they struggle when the solution is an acronym (e.g. sometimes a clue for "LOL" will be something like "Laughing out loud online"!). I've written some post-processing code to catch and correct problems like this, but that's a work in progress and there's still plenty of low-hanging fruit for me to tackle.
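One way to catch the "LOL" problem is a heuristic check that flags a clue if it contains the answer as a whole word, or if the first letters of consecutive clue words spell it out. This is a sketch of the idea, not the post-processing code mentioned above. `generate` is a hypothetical stand-in for whatever function sends the prompt to the model, and `max_tries` is an illustrative retry limit.

```python
import re


def clue_leaks_answer(clue, answer):
    """Heuristic: does the clue contain the answer as a word, or spell it as an acronym?"""
    target = re.sub(r"[^A-Z]", "", answer.upper())
    words = [t.upper() for t in re.findall(r"[A-Za-z]+", clue)]
    if target in words:
        return True
    # First letters of the clue's words, e.g. "Laughing out loud online" -> "LOLO".
    initials = "".join(w[0] for w in words)
    # A substring match means some run of consecutive words spells the answer.
    return len(target) >= 2 and target in initials


def pick_clean_clue(answer, generate, max_tries=3):
    """Call generate(answer) until a clue passes the check; None if every attempt leaks."""
    for _ in range(max_tries):
        clue = generate(answer)
        if not clue_leaks_answer(clue, answer):
            return clue
    return None
```

The check is deliberately crude: it ignores related word forms (an answer of "ART" in a clue containing "artful", say), and it can flag innocent clues whose initials happen to spell the answer, so it works better as a trigger for regeneration than as a final judgment.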

That's it for now (please do try a crossword or two and let me know what you think!). I have plenty of other ideas I want to work on in this space. Excitingly, some of them involve "themed" crosswords, which are a big step up in difficulty for crossword generation.